This blog summarizes our work of error bounds of imitating policies and environments, which is presented at NeurIPS 2020.

In our NeurIPS 2020 paper error bounds of imitating policies and environments [1], which is a joint work with Tian Xu and Yang Yu, we consider the imitation learning tasks. Recall that the goal of imitation learning is to obtain a high-quality policy by mimicking expert demonstrations. Various methods such as behavioral cloning (BC) [2], apprenticeship learning [3][4], generative adversarial imitation learning (GAIL) [5] has been proposed and empirically compared. The interesting empirical result is that GAIL often performs well than others in practice. To better understand this, we study the error bounds of these methods. Informally, our main results are

- BC suffers a quadratic error bound w.r.t. the effective planning horizon (a.k.a., the compounding error issue [6]; while GAIL could enjoy a linear error bound w.r.t. the effective planning horizon due to the state-action distribution matching.

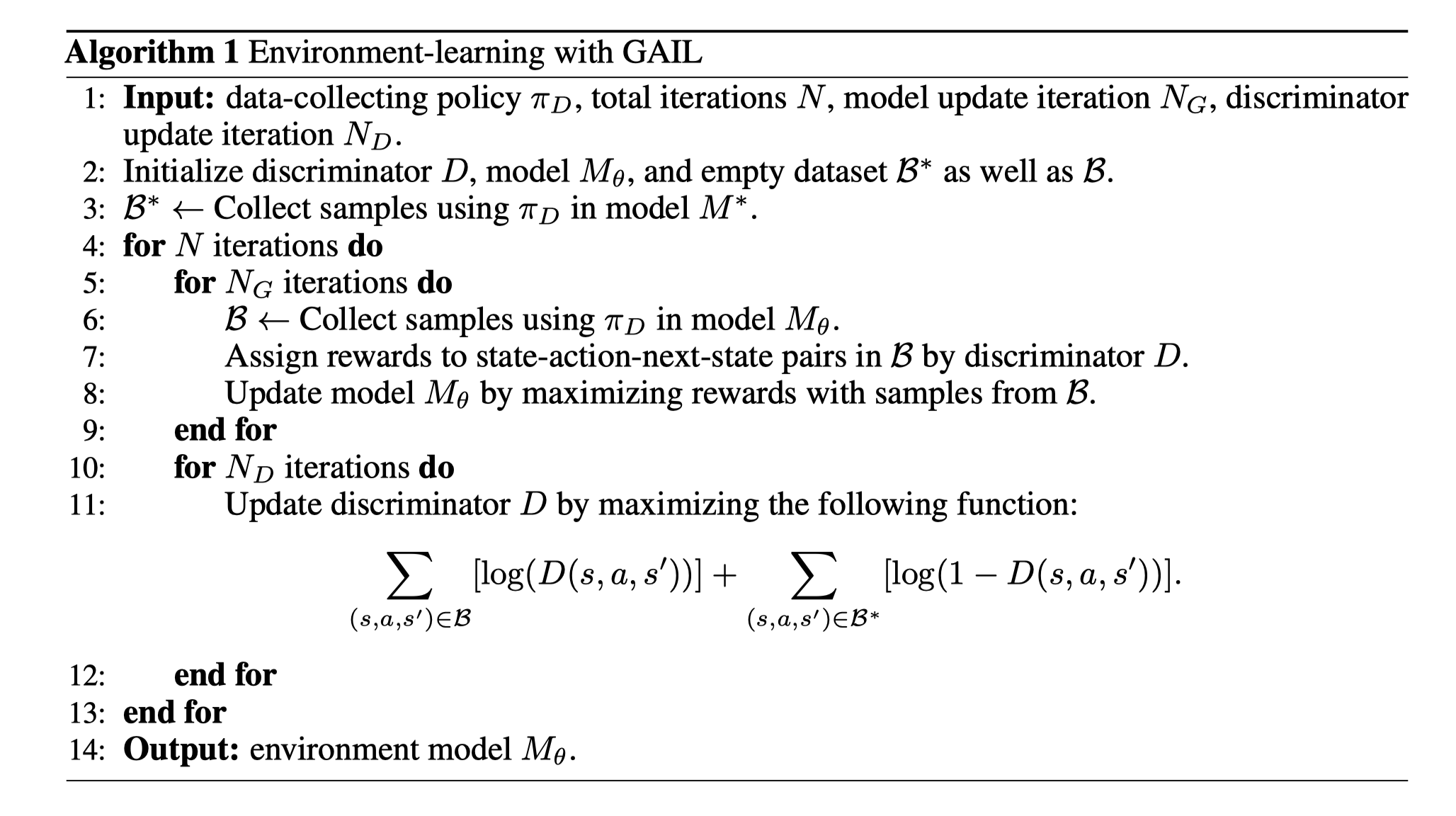

- Based on results from imitating policies, we show that if we apply GAIL to imitate environments, which is often in model-based reinforcement learning (MBRL), the policy evaluation error could be reduced to be linear w.r.t. model bias. This suggests a novel application of GAIL-based algorithms to recover the transition in MBRL.

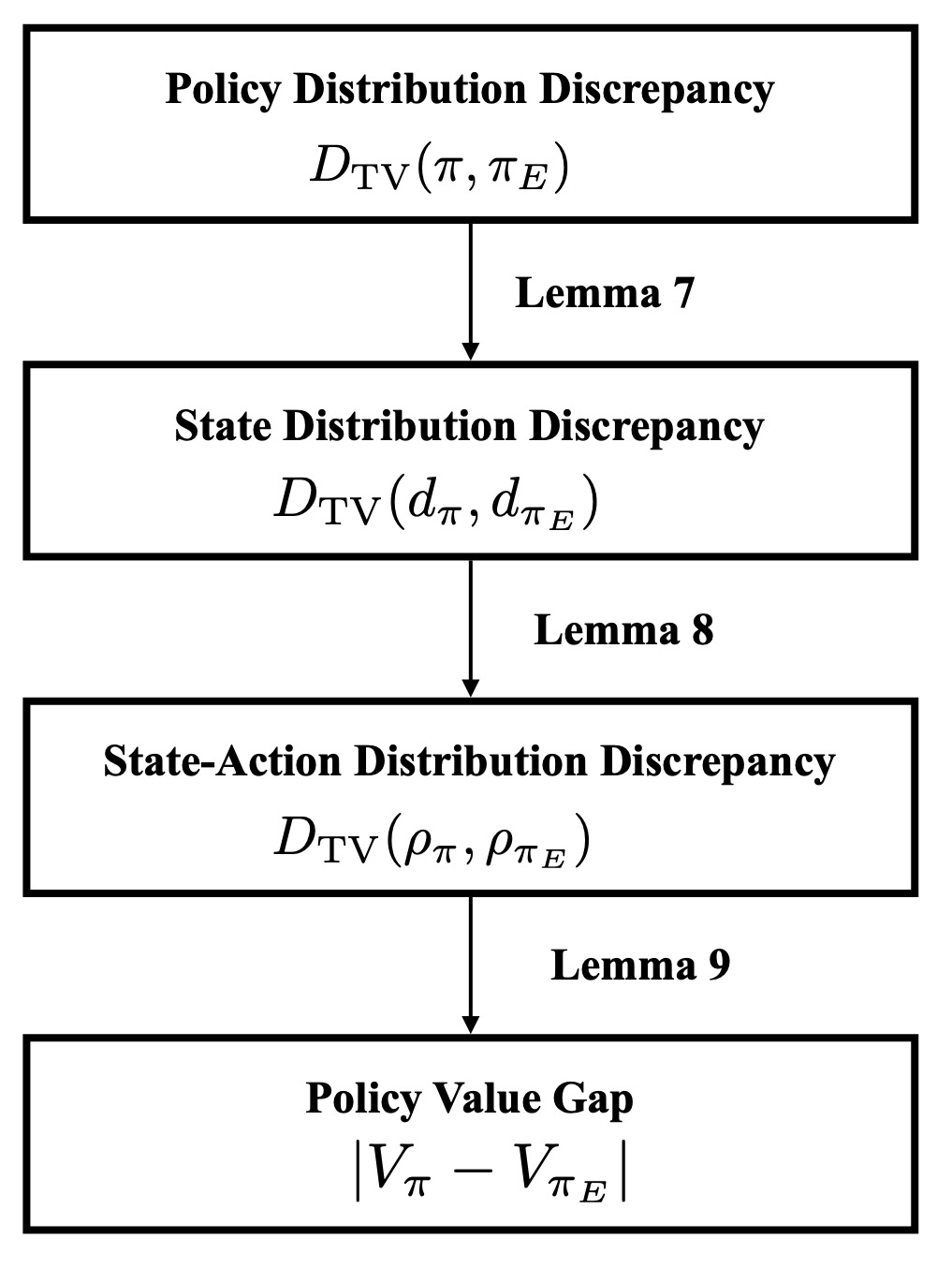

The above results are based on the error propagation analysis. The framework is shown in the following figure.

Under this framework, we see that the error-propagation path of BC (i.e., minimizing policy distribution discrepancy) is longer; hence, it enjoys a larger error bound. In contrast, GAIL is to directly minimize state-action distribution discrepancy and consequently, its error bound is smaller.

As mentioned before, based on this insight, we could apply GAIL to imitate environments. In this way, the policy evaluation error is small in this empirical environment due to state-action-next-state distribution matching.

[1] Xu, Tian, Ziniu Li, and Yang Yu. "Error Bounds of Imitating Policies and Environments." NeurIPS 2020.

[2] Pomerleau, Dean A. "Efficient training of artificial neural networks for autonomous navigation." Neural computation 3.1 (1991): 88-97.

[3] Abbeel, Pieter, and Andrew Y. Ng. "Apprenticeship learning via inverse reinforcement learning." ICML 2004.

[4] Syed, Umar, and Robert E. Schapire. "A game-theoretic approach to apprenticeship learning." NeurIPS 2008.

[5] Ho, Jonathan, and Stefano Ermon. "Generative adversarial imitation learning." NeurIPS 2016.

[6] Ross, Stéphane, and Drew Bagnell. "Efficient reductions for imitation learning." AISTATS 2010.